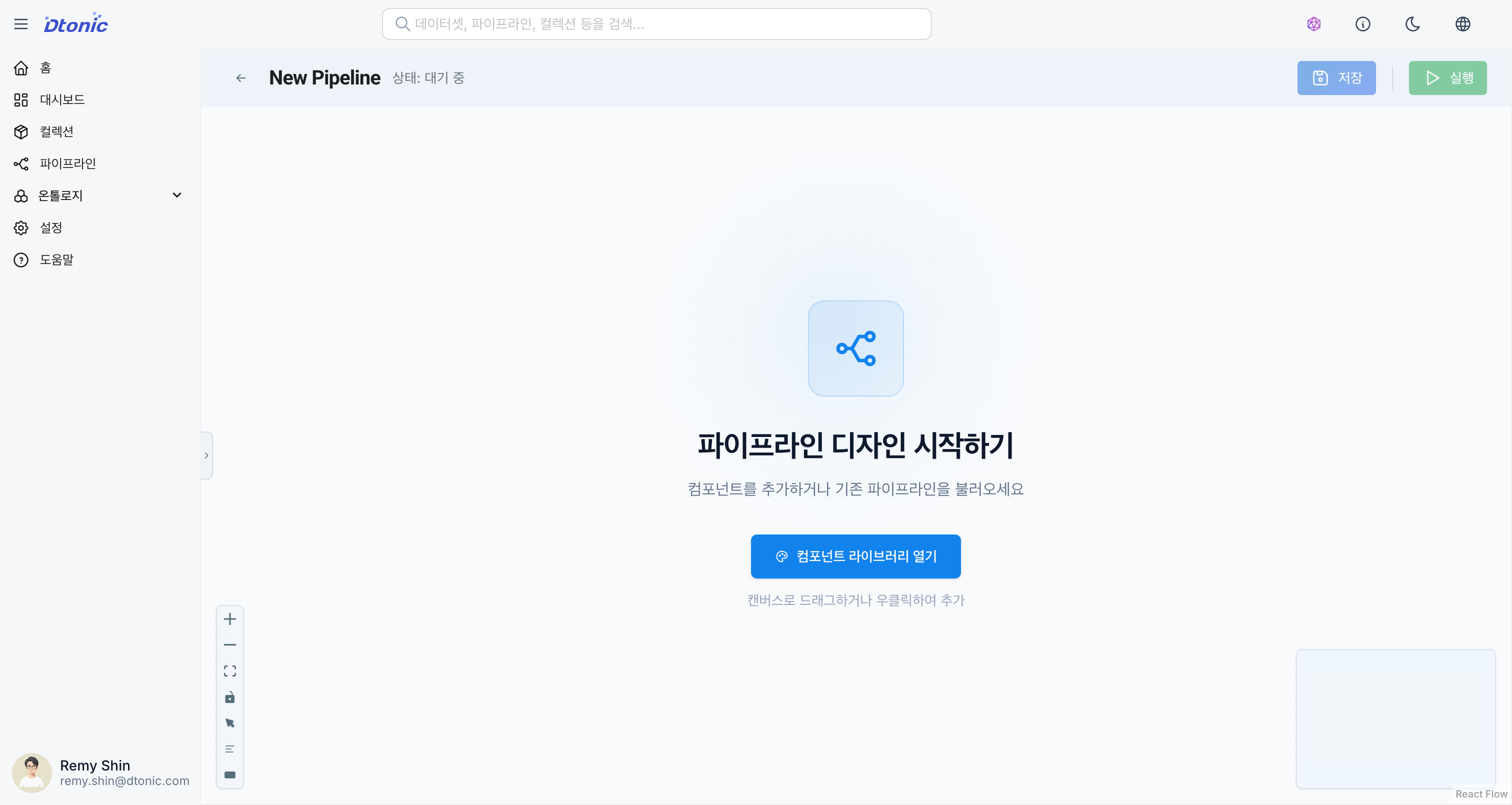

Adding and Connecting Nodes

This section explains how to add the nodes that make up a workflow and how to connect them to define the flow of data.

How to Add Nodes

1. Quick Add

[Screenshot] Adding Nodes (Quick Add Panel)

Drag and drop the desired node type from the Quick Add section in the left panel onto the canvas.

2. Import from Collections

Drag and drop datasets or code you've already created from the Collections tree in the left panel. Use this method when you want to reuse existing assets instead of recreating them.

3. Context Menu

Right-click on an empty area of the canvas to open the menu, then select Add Dataset or Add Code.

4. Keyboard Shortcuts

Use the following shortcuts for quick actions.

- Add Dataset Node: Ctrl(Cmd) + Shift + D

- Add Code Node: Ctrl(Cmd) + Shift + C

Node Types

Dataset Node

Serves as a data source or sink.

| Type | Description | Use Case |
|---|---|---|
| Delta Lake | Delta Lake table | Batch data processing, version control |
| Kafka | Kafka topic | Real-time streaming data |
| DDS | DDS interface | Real-time distributed system integration |
| REST API | HTTP endpoint | External API calls |

Delta Lake Node Settings

- Table Name: Delta Lake table path

- Schema: Column definitions (auto-inference available)

- Partition Key: Column for data partitioning

- Write Mode: Append, Overwrite, Merge
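
The three write modes differ in how incoming rows combine with rows already in the table. The sketch below illustrates the usual semantics with plain Python lists of dicts. It is not the platform's implementation: the function name `apply_write_mode` and the `key` parameter are hypothetical, and it assumes that Merge means an upsert on a key column, which should be confirmed in the node's settings panel.

```python
from typing import Dict, List

Row = Dict[str, object]


def apply_write_mode(existing: List[Row], incoming: List[Row],
                     mode: str, key: str = "id") -> List[Row]:
    """Illustrate how each write mode combines existing and incoming rows.

    Hypothetical helper for explanation only; `key` is an assumed merge key.
    """
    if mode == "append":
        # Keep every existing row and add the new ones at the end.
        return existing + incoming
    if mode == "overwrite":
        # Discard the existing rows entirely.
        return list(incoming)
    if mode == "merge":
        # Upsert: update rows whose key matches, insert the rest.
        merged = {row[key]: row for row in existing}
        for row in incoming:
            merged[row[key]] = {**merged.get(row[key], {}), **row}
        return list(merged.values())
    raise ValueError(f"unknown write mode: {mode!r}")


existing = [{"id": 1, "qty": 5}, {"id": 2, "qty": 7}]
incoming = [{"id": 2, "qty": 9}, {"id": 3, "qty": 1}]

print(len(apply_write_mode(existing, incoming, "append")))     # all rows kept
print(len(apply_write_mode(existing, incoming, "overwrite")))  # only new rows
print(apply_write_mode(existing, incoming, "merge"))           # id 2 updated
```

Append is the safe default for event-style data, while Merge avoids duplicates when the same key can arrive more than once.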

Kafka Node Settings

- Topic: Kafka topic name

- Broker: Kafka broker address

- Serialization Format: JSON, Avro, Protobuf

- Consumer Group: Consumer group name (source nodes only)
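
To make the relationship between these settings concrete, here is a minimal sketch that models them as a Python dataclass with basic validation. The class name `KafkaNodeSettings`, the `is_source` flag, and the rule that a source node must have a consumer group are all assumptions made for illustration; the platform may store and validate these settings differently.

```python
from dataclasses import dataclass
from typing import Optional

SUPPORTED_FORMATS = {"JSON", "Avro", "Protobuf"}


@dataclass
class KafkaNodeSettings:
    """Hypothetical container mirroring the settings listed above."""
    topic: str
    broker: str
    serialization_format: str = "JSON"
    consumer_group: Optional[str] = None
    is_source: bool = True  # assumed flag: True when the node reads from the topic

    def validate(self) -> None:
        if not self.topic:
            raise ValueError("topic must not be empty")
        if self.serialization_format not in SUPPORTED_FORMATS:
            raise ValueError(f"unsupported format: {self.serialization_format}")
        # Assumption: a consumer group is required only when consuming.
        if self.is_source and not self.consumer_group:
            raise ValueError("source nodes need a consumer group")


settings = KafkaNodeSettings(
    topic="orders",                 # example values, not real endpoints
    broker="localhost:9092",
    serialization_format="Avro",
    consumer_group="workflow-orders",
)
settings.validate()
print(settings)
```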

Code Node

Performs logic to transform or process data.

| Type | Language | Use Case |
|---|---|---|
| Python | Python 3.x | General-purpose data processing, ML model application |
| SQL | SQL | Data transformation, aggregation, joins |

Python Node Settings

- Script: Write Python code

- Package Dependencies: List of required pip packages

- Input Variables: Input dataset mapping

- Output Variables: Output dataset mapping

- Environment Variables: Runtime environment variable settings

    # Python node example
    import pandas as pd

    # Load input data
    df = input_dataset.read()

    # Transform data
    df['total'] = df['price'] * df['quantity']
    df = df[df['total'] > 1000]

    # Output
    output_dataset.write(df)
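
The node script relies on `input_dataset` and `output_dataset` being provided by the runtime. To try the same logic outside the platform, one option is a small in-memory stand-in, sketched below. The `InMemoryDataset` class is hypothetical and only mimics the `read()` and `write()` calls used in the example; the real dataset objects may behave differently.

```python
import pandas as pd


class InMemoryDataset:
    """Hypothetical stand-in for the dataset objects the platform injects."""

    def __init__(self, frame=None):
        self.frame = frame

    def read(self) -> pd.DataFrame:
        # Return a copy so the script cannot mutate the stored input.
        return self.frame.copy()

    def write(self, frame: pd.DataFrame) -> None:
        self.frame = frame.reset_index(drop=True)


input_dataset = InMemoryDataset(pd.DataFrame({
    "price": [100, 250, 40],
    "quantity": [5, 8, 10],
}))
output_dataset = InMemoryDataset()

# Same logic as the node script: compute totals, keep rows above 1000.
df = input_dataset.read()
df["total"] = df["price"] * df["quantity"]
df = df[df["total"] > 1000]
output_dataset.write(df)

print(output_dataset.frame)
```

Only the second sample row (250 × 8 = 2000) passes the filter, which makes it easy to confirm the threshold behaves as intended before running the node in a workflow.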

SQL Node Settings

- Query: Write SQL query

- Input Table Alias: Reference input dataset as a table

- Output Schema: Define result schema

    -- SQL node example
    SELECT
        category,
        SUM(amount) as total_amount,
        COUNT(*) as order_count
    FROM orders
    WHERE order_date >= '2024-01-01'
    GROUP BY category
    ORDER BY total_amount DESC
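
To see what this query returns, it can be run against a few sample rows with Python's built-in `sqlite3` module, as sketched below. The table layout and sample data are invented for illustration, and SQLite stands in for whatever SQL engine the platform actually uses, so dialect details may differ. Dates are stored as ISO-formatted text here, which is why the string comparison in the `WHERE` clause works.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (category TEXT, amount REAL, order_date TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        ("books", 120.0, "2024-02-01"),
        ("books", 80.0, "2024-03-15"),
        ("toys", 300.0, "2024-01-20"),
        ("toys", 50.0, "2023-12-31"),  # filtered out by the date condition
    ],
)

query = """
SELECT
    category,
    SUM(amount) AS total_amount,
    COUNT(*) AS order_count
FROM orders
WHERE order_date >= '2024-01-01'
GROUP BY category
ORDER BY total_amount DESC
"""
rows = conn.execute(query).fetchall()
for row in rows:
    print(row)  # (category, total_amount, order_count)
conn.close()
```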

Connecting Nodes

Connect nodes to define the flow of data.

- Hover over the right handle (Output) of the upstream node.

- Click and drag to the left handle (Input) of the downstream node.

- An edge is created, and a data dependency is established between the two nodes.
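
Because each edge records that the downstream node depends on the upstream node, the set of edges implies an order in which nodes can run. The sketch below derives such an order with the standard-library `graphlib` module (Python 3.9+). The node names are hypothetical, and this section does not describe how the platform schedules nodes, so treat it only as an illustration of what a data dependency means.

```python
from graphlib import TopologicalSorter

# Hypothetical node names; each edge is (upstream, downstream).
edges = [
    ("orders_raw", "clean_orders"),
    ("clean_orders", "aggregate_sales"),
    ("aggregate_sales", "sales_summary"),
]

# TopologicalSorter expects a mapping of node -> set of predecessors.
dependencies = {}
for upstream, downstream in edges:
    dependencies.setdefault(downstream, set()).add(upstream)
    dependencies.setdefault(upstream, set())

# Every node appears after all of the nodes it depends on.
execution_order = list(TopologicalSorter(dependencies).static_order())
print(execution_order)
```

If the edges formed a cycle, `static_order()` would raise `graphlib.CycleError`, which is one way to detect a workflow that can never start.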

Connection Rules

- Dataset Node → Code Node: Read data (source)

- Code Node → Dataset Node: Write data (sink)

- Code Node → Code Node: Pass intermediate results
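
The rules above can be expressed as a small lookup table, sketched below. The function name `describe_connection` and the lowercase kind labels are hypothetical. The sketch also assumes that any pairing not listed (for example Dataset Node → Dataset Node) is rejected; the list above does not say so explicitly.

```python
ALLOWED_CONNECTIONS = {
    ("dataset", "code"): "read data (source)",
    ("code", "dataset"): "write data (sink)",
    ("code", "code"): "pass intermediate results",
}


def describe_connection(upstream_kind: str, downstream_kind: str) -> str:
    """Return the role of an edge, or raise if the pairing is not listed."""
    try:
        return ALLOWED_CONNECTIONS[(upstream_kind, downstream_kind)]
    except KeyError:
        # Assumption: unlisted pairings are treated as invalid.
        raise ValueError(
            f"{upstream_kind} -> {downstream_kind} is not a listed connection"
        ) from None


print(describe_connection("dataset", "code"))
print(describe_connection("code", "dataset"))
print(describe_connection("code", "code"))
```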

When a code node is connected to a dataset node, the input/output variables in the code can be mapped automatically to dataset IDs. You can check the mapping in the settings panel.

Node Management

- Move: Drag nodes to change their positions.

- Duplicate: Select a node and choose Duplicate from the right-click menu, or use Ctrl+C, Ctrl+V.

- Delete: Select a node and press Delete, or choose Delete from the right-click menu.

- Align: Select multiple nodes and use the Auto Layout button in the toolbar to organize neatly.

- Group Selection: Use Shift + drag to select multiple nodes at once.